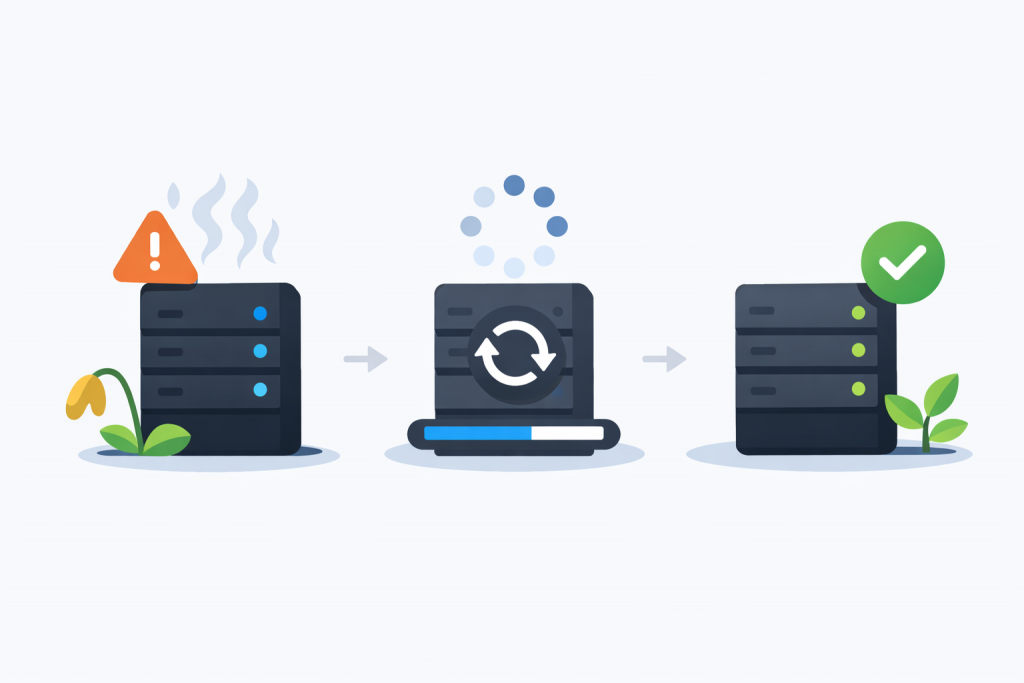

A server is not just a computer that runs without interruption. It is a complex system built for sustained load, request processing, data storage, and stable 24/7 operation. During operation, every component generates heat, and this is completely normal. Problems begin when that heat is not dissipated properly. Inadequate cooling gradually but steadily shortens a server's lifespan, even if the machine appears to keep running without failures.